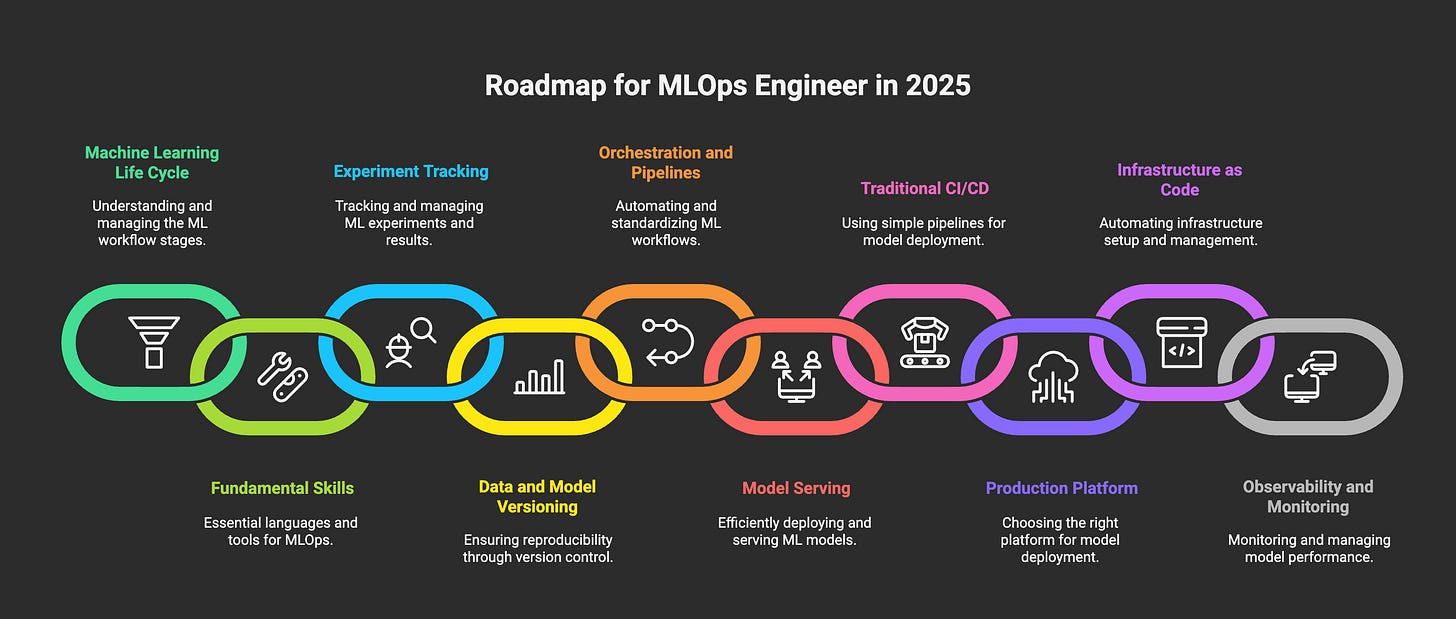

Roadmap to an MLOps Engineer in 2025

Step-by-step guide to mastering the skills and tools you need for a successful MLOps career

Hello Everyone,

Welcome again to the AKVAverse. I’m Abhishek Veeramalla, aka the AKVAman, your guide through the chaos.

Before we dive into how you can become an MLOps engineer, I want to clear something up. MLOps is not just about playing with tools or cool technology. It is really about understanding how machine learning fits into the bigger system and how we keep that system running smoothly and without any obstacles, even when it grows bigger.

Imagine building a city. It is not only about the buildings, which are like the models. You also need roads to connect everything, which are like the pipelines. Then there are utilities like electricity and water, similar to the infrastructure that supports everything. Finally, you have traffic control to keep things moving safely, just like monitoring systems do in MLOps.

To be a strong MLOps engineer, you need to understand all these parts, and that is what we will break down together today, step by step.

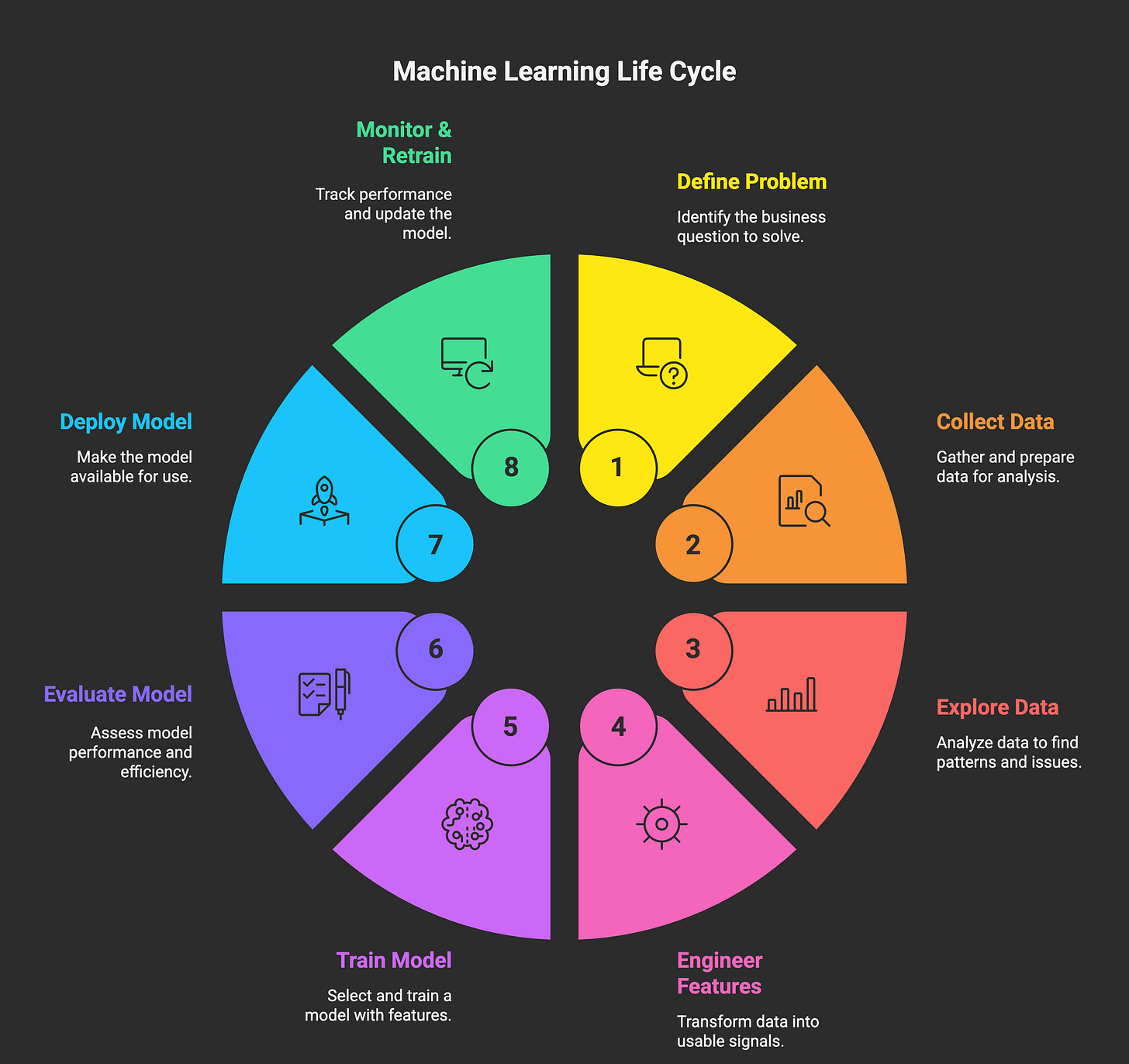

Understand the Machine Learning Life Cycle

Just like we learn about the SDLC (Software Development Life Cycle) when we work in DevOps, MLOps has its own life cycle, and it is equally important. In DevOps, understanding the SDLC helps us build, test, and deploy software smoothly. Similarly, in MLOps, we need to understand the ML life cycle to make sure our machine learning models are built, deployed, and maintained properly.

Let me break it down for you:

Problem Definition: What business question are we actually trying to solve?

Data Collection / Loading: Gathering the data and getting it ready is already half the fight.

Exploratory Data Analysis (EDA): Dig into your data, find patterns, and fix any issues you spot.

Feature Engineering: Turn raw data into the signals your model can really use.

Model Training: Pick your model and train it with those features.

Model Evaluation: Check whether your model meets your quality metrics on data it has not seen and whether it runs efficiently.

Deployment & Serving: Put your model out there so real users or systems can use it.

Monitoring & Retraining: Keep an eye on how the model performs live, and update it when needed.
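To make the stages above a little more concrete, here is a minimal sketch of a few of them (data collection, training, evaluation, and a retraining check) in plain Python. Everything in it is an assumption for illustration: the synthetic data, the function names, and the 1.5x retraining tolerance are made up, and a real project would use libraries like pandas and scikit-learn instead of hand-written least squares.

```python
import random


def collect_data(n=200, seed=0):
    """Data collection: synthetic (x, y) pairs standing in for real records."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = rng.uniform(0, 10)
        y = 3.0 * x + 2.0 + rng.gauss(0, 0.5)  # known relationship plus noise
        rows.append((x, y))
    return rows


def split(rows, test_fraction=0.25):
    """Hold back the last part of the data for evaluation."""
    cut = int(len(rows) * (1 - test_fraction))
    return rows[:cut], rows[cut:]


def train(rows):
    """Model training: ordinary least squares for y = slope * x + intercept."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    var = sum((x - mean_x) ** 2 for x, _ in rows)
    slope = cov / var
    return {"slope": slope, "intercept": mean_y - slope * mean_x}


def predict(model, x):
    """Serving: apply the trained parameters to a new input."""
    return model["slope"] * x + model["intercept"]


def evaluate(model, rows):
    """Model evaluation: mean absolute error on the given rows."""
    return sum(abs(predict(model, x) - y) for x, y in rows) / len(rows)


def needs_retraining(live_mae, baseline_mae, tolerance=1.5):
    """Monitoring: flag the model when live error grows past the tolerance."""
    return live_mae > tolerance * baseline_mae
```

You would call these in order: `collect_data()`, `split()`, `train()` on the training part, `evaluate()` on the held-back part, and later `needs_retraining()` with the error you observe in production.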

Master the Fundamentals First

Python (Essentials Only): Don’t get distracted by full web or app development. Focus on learning the packages that really matter for MLOps: numpy, pandas, scikit-learn, MLflow, DVC, fastapi, optuna, xgboost, pyaml, pytest, and joblib.

Linux: You will deploy and monitor models mostly on Linux servers, so basic commands, permissions, and process management are must-know skills.

Git & GitHub: Use Git to track your code and model versions, collaborate smoothly with your teammates, and automate workflows using branching and webhooks.

Cloud Fundamentals: Pick one cloud platform (AWS, Azure, or Google Cloud) and learn the basics. Understand how compute, storage, and networking work there because that is where your models will run.

Networking: Make sure you know how to troubleshoot request failures, latency issues, and connectivity problems, whether you are working in the cloud or on-premises. These skills will save you a lot of trouble later.
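As a small example of the kind of check that helps with connectivity and latency problems, here is a sketch that tries to open a TCP connection and reports whether it worked and how long it took. The function name `check_tcp` and the 2-second default timeout are my own choices for illustration, not part of any tool mentioned here.

```python
import socket
import time


def check_tcp(host, port, timeout=2.0):
    """Try to open a TCP connection; return (reachable, latency_ms, error)."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            latency_ms = (time.perf_counter() - start) * 1000
            return True, latency_ms, None
    except OSError as exc:
        # OSError covers refused connections, timeouts, and DNS failures.
        latency_ms = (time.perf_counter() - start) * 1000
        return False, latency_ms, f"{type(exc).__name__}: {exc}"
```

If the call fails quickly, the port is probably closed or refused; if it fails only after the full timeout, look at firewalls, security groups, or routing.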

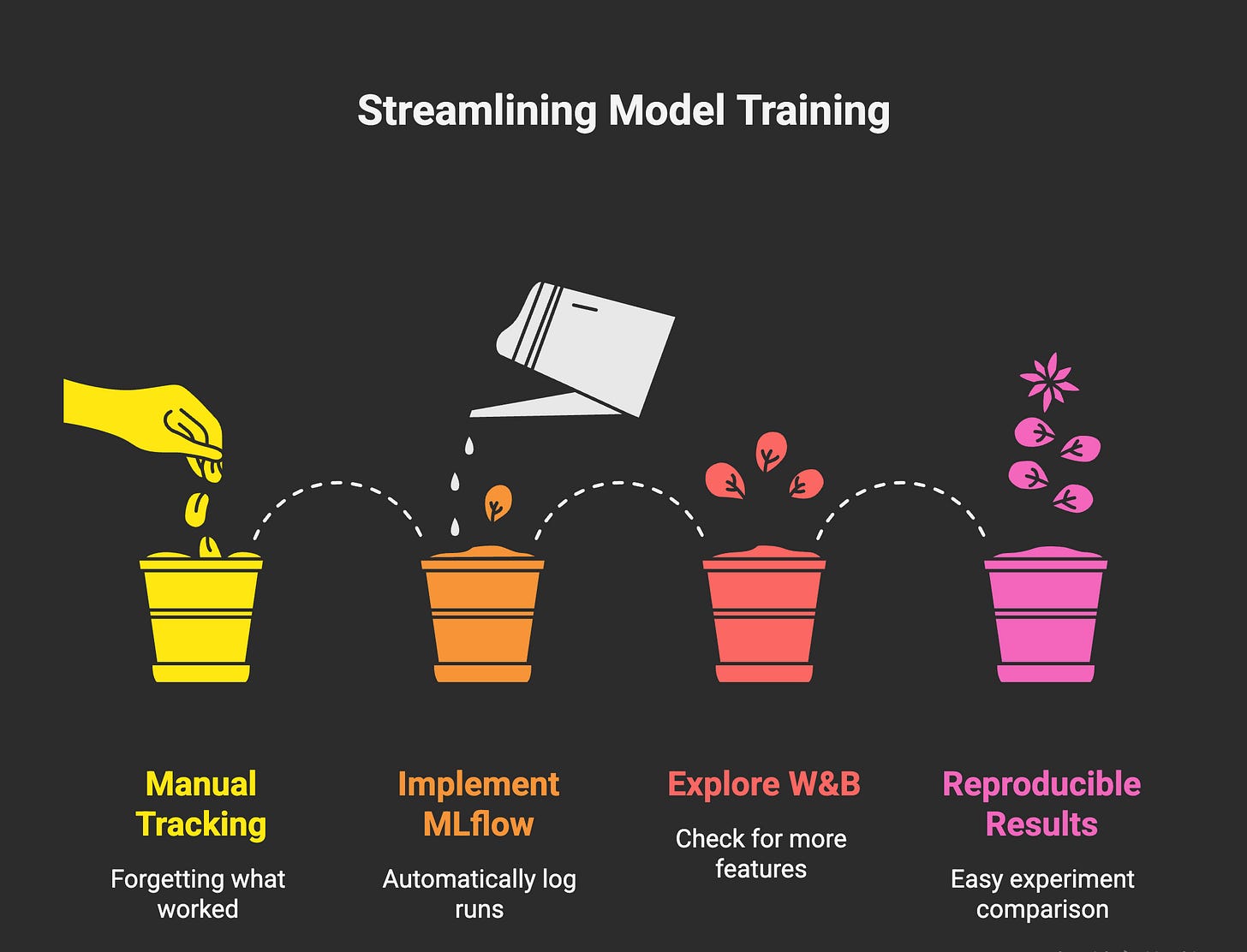

Experiment Tracking

When you are training models, you try out a lot of different things, and it is super easy to forget what actually worked. That is why you should always use tools like MLflow to automatically log all your runs, settings, and results.

You might also want to check out Weights & Biases if you need more features. Keeping track like this helps you reproduce your experiments and compare results without any confusion. Trust me, it makes your life way easier.
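To show what "logging your runs" boils down to, here is a tiny stand-in tracker written with only the standard library. It is not MLflow's API (MLflow gives you calls such as `mlflow.log_param` and `mlflow.log_metric`, plus a UI on top); the `RunTracker` class and the JSON Lines file format are assumptions made just for this sketch.

```python
import json
import time
import uuid
from pathlib import Path


class RunTracker:
    """Append-only log of experiment runs, one JSON record per line."""

    def __init__(self, path):
        self.path = Path(path)

    def log_run(self, params, metrics):
        """Store the settings and results of one training run."""
        record = {
            "run_id": uuid.uuid4().hex,
            "timestamp": time.time(),
            "params": params,
            "metrics": metrics,
        }
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")
        return record["run_id"]

    def runs(self):
        """Return every logged run, oldest first."""
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def best_run(self, metric, higher_is_better=True):
        """Compare runs on one metric and return the winner (or None)."""
        candidates = [r for r in self.runs() if metric in r["metrics"]]
        if not candidates:
            return None
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r["metrics"][metric])
```

The point is the habit, not the tool: every run writes down its parameters and its metrics, so you can always answer "which settings gave the best result?" later.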

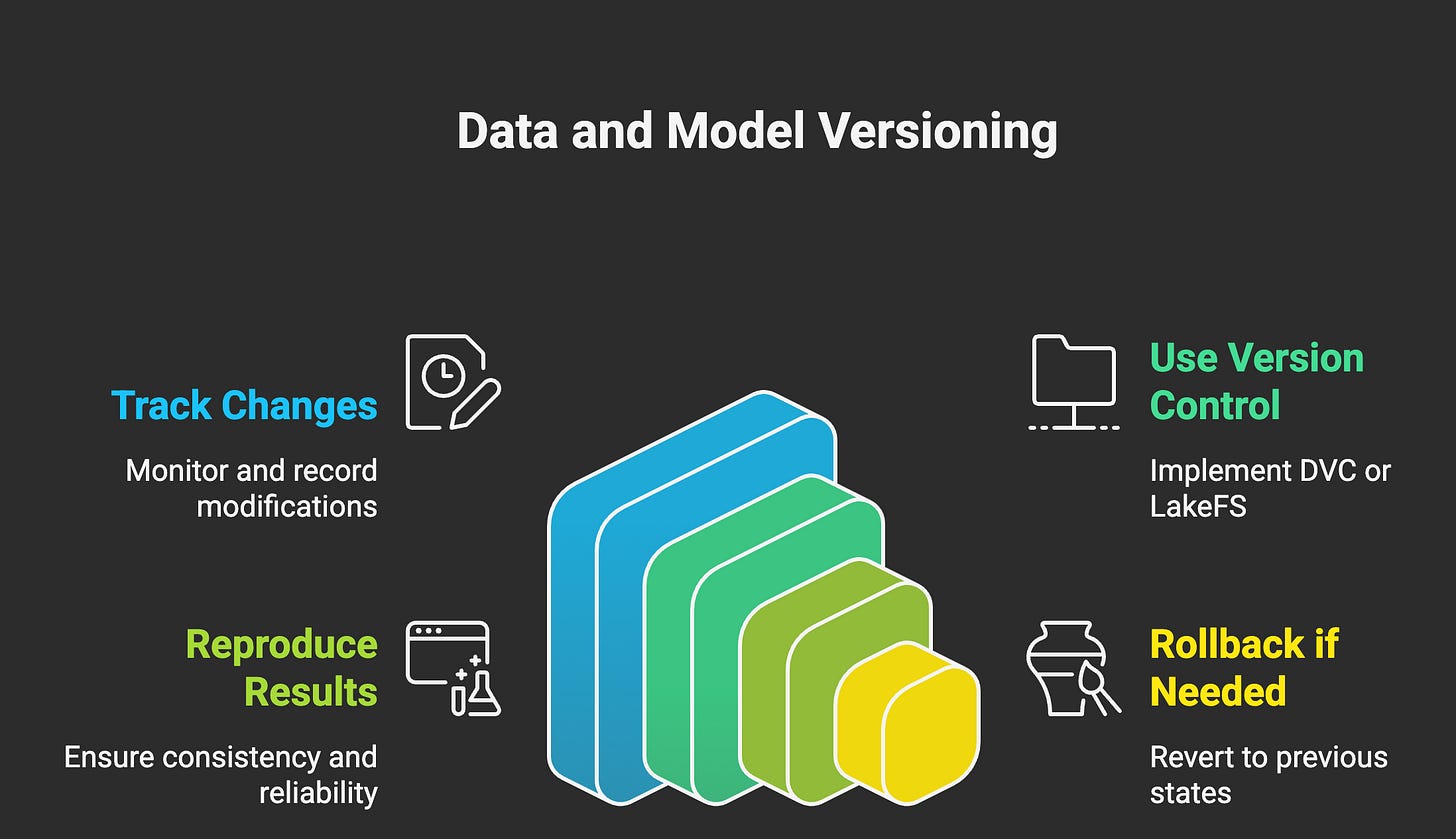

Data and Model Versioning

Data and models don’t stay the same; they are always changing. That is why you need to keep track of every version to avoid getting confused.

I recommend using DVC (Data Version Control) to manage and version your datasets and models. If you want to explore alternatives, LakeFS is worth checking out.

Versioning like this helps you reproduce your results and roll back to a previous state if something goes wrong. It’s a lifesaver for keeping things organized.
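The core idea behind this kind of versioning is content hashing: DVC records a hash of each tracked file in a small pointer file that Git can version, while the data itself lives in a cache. The sketch below imitates that idea with the standard library only. It is not DVC, and the function names, the `.version.json` pointer file, and the use of SHA-256 are assumptions for illustration.

```python
import hashlib
import json
import shutil
from pathlib import Path


def file_hash(path, chunk_size=1 << 20):
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(data_path, cache_dir):
    """Copy the file into the cache under its hash and write a pointer file."""
    data_path, cache_dir = Path(data_path), Path(cache_dir)
    digest = file_hash(data_path)
    cache_dir.mkdir(parents=True, exist_ok=True)
    cached = cache_dir / digest
    if not cached.exists():  # identical content is stored only once
        shutil.copy2(data_path, cached)
    pointer = data_path.with_name(data_path.name + ".version.json")
    pointer.write_text(json.dumps({"path": data_path.name, "sha256": digest}))
    return digest


def restore(data_path, cache_dir, digest):
    """Roll the working file back to a previously snapshotted version."""
    shutil.copy2(Path(cache_dir) / digest, data_path)
```

Because the pointer file is tiny, you can commit it next to your code, and checking out an old commit tells you exactly which data version it was trained on.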

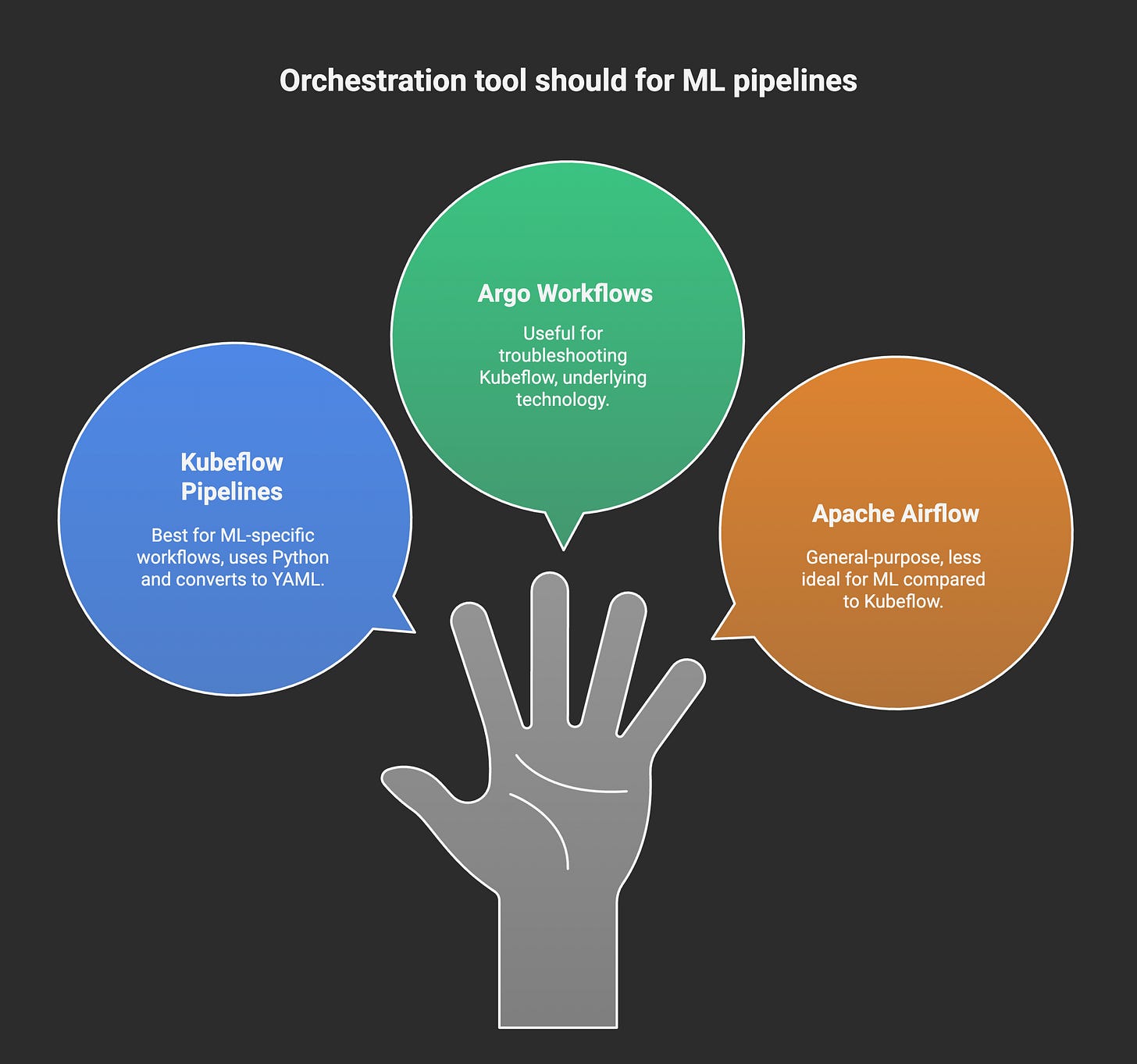

Orchestration and Pipelines

Automate your entire ML workflow by using pipelines. Kubeflow Pipelines lets you build and run these workflows easily. You define the pipeline in Python, and the Kubeflow Pipelines SDK compiles it to YAML for you.

It is also helpful to learn about Argo Workflows since Kubeflow uses it under the hood. Knowing Argo Workflows makes troubleshooting much easier.

You can try Apache Airflow as well, but for ML specifically, Kubeflow is usually the better choice.
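Whatever orchestrator you pick, the underlying idea is the same: steps form a dependency graph, and each step runs only after the steps it depends on. Here is a minimal sketch of that idea using `graphlib` from the Python standard library (Python 3.9+). It is not Kubeflow, Argo, or Airflow code, and `run_pipeline` and the step names are hypothetical.

```python
from graphlib import TopologicalSorter


def run_pipeline(steps, dependencies):
    """Run steps in dependency order.

    steps: maps a step name to a callable that receives the results so far.
    dependencies: maps a step name to the set of steps it must wait for.
    """
    # static_order() yields each step after all of its upstream steps
    # and raises graphlib.CycleError if the graph contains a cycle.
    order = list(TopologicalSorter(dependencies).static_order())
    context = {}
    for name in order:
        context[name] = steps[name](context)
    return order, context


# Hypothetical four-step workflow for illustration.
example_steps = {
    "load": lambda ctx: [1, 2, 3, 4],
    "features": lambda ctx: [x * 2 for x in ctx["load"]],
    "train": lambda ctx: sum(ctx["features"]) / len(ctx["features"]),
    "evaluate": lambda ctx: abs(ctx["train"] - 5.0) < 1e-9,
}
example_dependencies = {
    "features": {"load"},
    "train": {"features"},
    "evaluate": {"train"},
}
```

Real orchestrators add what this sketch leaves out: each step runs in its own container, results are passed as artifacts, and failed steps can be retried.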

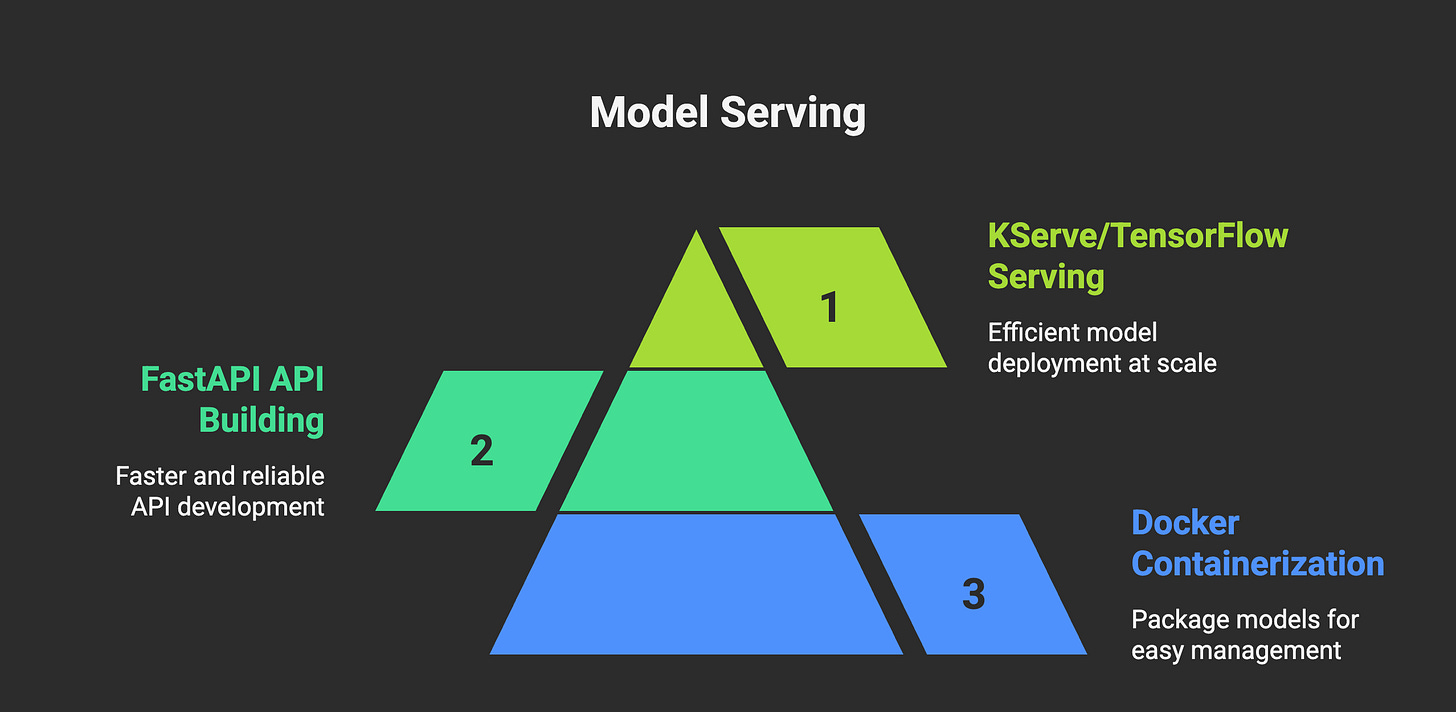

Model Serving

Here is how you package your models for production. Start by using Docker to containerize your models so they become easy to move and manage in different environments. Next, build your APIs with FastAPI: it is built around async request handling, validates request data from your type hints, and generates API docs for you, which usually makes it a better fit for model APIs than Flask or Django. When you are ready to deploy at scale, use KServe or TensorFlow Serving on Kubernetes to serve your models smoothly and efficiently.
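To show the shape of a model API, here is a dependency-free sketch built on Python's `http.server`. I am deliberately not using FastAPI here so the example runs without installing anything; with FastAPI, the handler class would shrink to a decorated function and a request model. The weights, the `/predict` and `/health` routes, and the payload format are all assumptions for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical stand-in for a trained model loaded once at startup.
WEIGHTS = [0.5, -0.25]
BIAS = 1.0


def predict(features):
    """Linear model: weighted sum of the features plus a bias."""
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


class PredictHandler(BaseHTTPRequestHandler):
    def _send_json(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self):
        # Health endpoint for load balancers and Kubernetes probes.
        if self.path == "/health":
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self):
        if self.path != "/predict":
            self._send_json(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            result = predict([float(x) for x in payload["features"]])
        except (ValueError, KeyError, TypeError) as exc:
            # Bad JSON, missing "features", or wrong feature count.
            self._send_json(400, {"error": str(exc)})
            return
        self._send_json(200, {"prediction": result})

    def log_message(self, fmt, *args):
        pass  # keep the example quiet; real services should log requests


def make_server(host="127.0.0.1", port=8000):
    """Build the server; call serve_forever() on the result to start it."""
    return ThreadingHTTPServer((host, port), PredictHandler)
```

Notice the three things every serving layer needs regardless of framework: the model is loaded once, bad input gets a clear 400 instead of a crash, and there is a health endpoint for the platform to probe.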

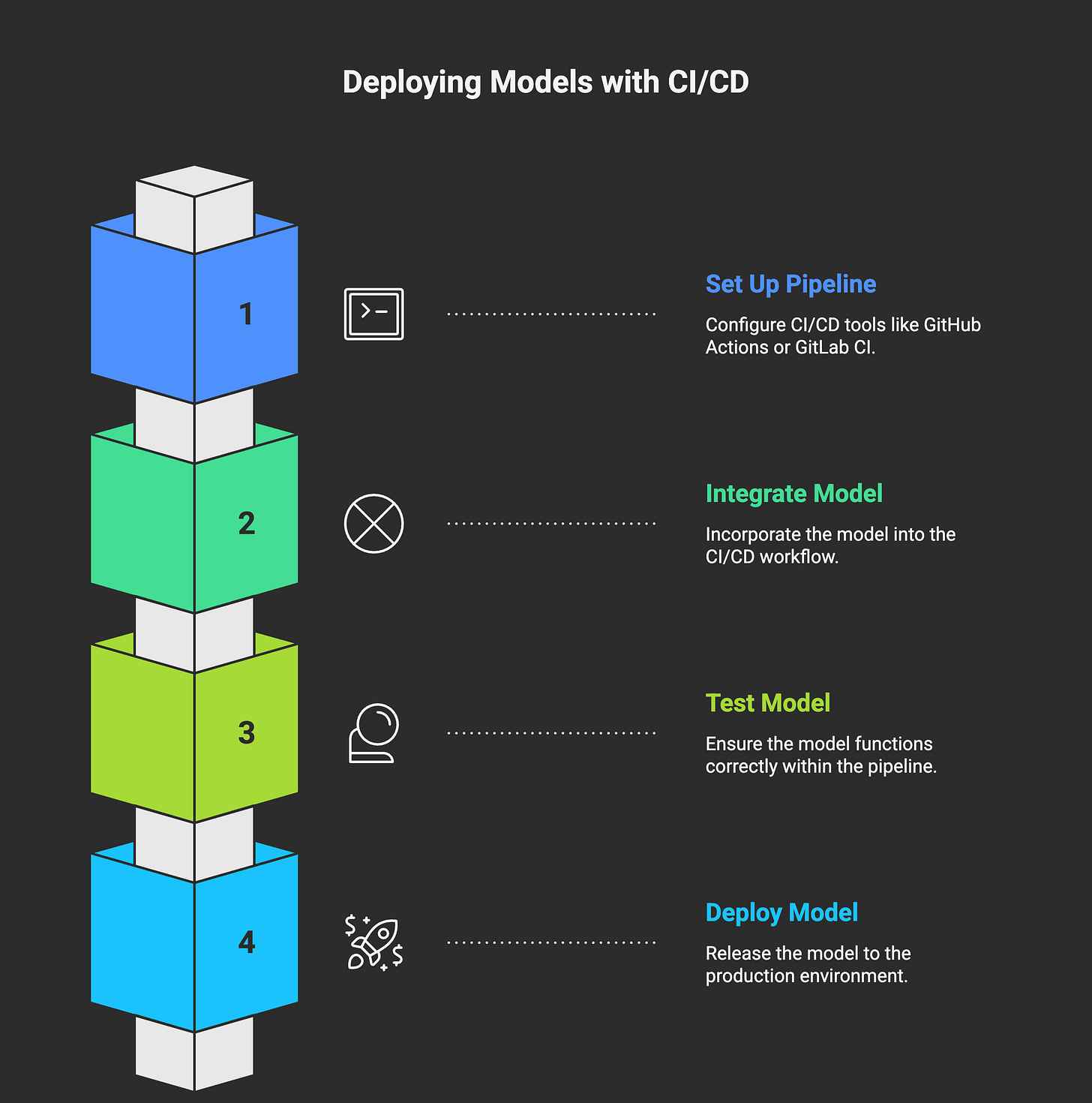

Model Deployment with Traditional CI/CD

For simple projects, you can use CI/CD pipelines like GitHub Actions or GitLab CI to deploy your models quickly and reliably. This method works well when you are part of a smaller team or handling straightforward workflows. However, for more complex projects, you should consider more advanced deployment options.
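One step that fits nicely into a CI/CD pipeline is a quality gate: a small script that compares the new model against the current one and fails the job if it is not good enough. CI systems such as GitHub Actions and GitLab CI treat a non-zero exit code as a failed step. The sketch below assumes metrics arrive as plain dictionaries; the function names, the `accuracy` and `latency_ms` keys, and the thresholds are all hypothetical.

```python
def should_deploy(candidate, baseline, metric="accuracy",
                  min_gain=0.0, max_latency_ms=None):
    """Return (ok, reasons) for promoting the candidate model."""
    reasons = []
    required = baseline[metric] + min_gain
    if candidate[metric] < required:
        reasons.append(
            f"{metric} {candidate[metric]:.4f} is below required {required:.4f}"
        )
    latency = candidate.get("latency_ms")
    if max_latency_ms is not None and latency is not None and latency > max_latency_ms:
        reasons.append(f"latency {latency} ms exceeds limit {max_latency_ms} ms")
    return not reasons, reasons


def gate_exit_code(candidate, baseline, **rules):
    """Print why the gate failed and return 0 (deploy) or 1 (block)."""
    ok, reasons = should_deploy(candidate, baseline, **rules)
    for reason in reasons:
        print(f"BLOCKED: {reason}")
    return 0 if ok else 1
```

In a workflow, you would load the two metric dictionaries from your tracking tool and pass the result of `gate_exit_code(...)` to `sys.exit()`, so the deploy job only runs when the gate passes.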

Production Platform and Model Deployment

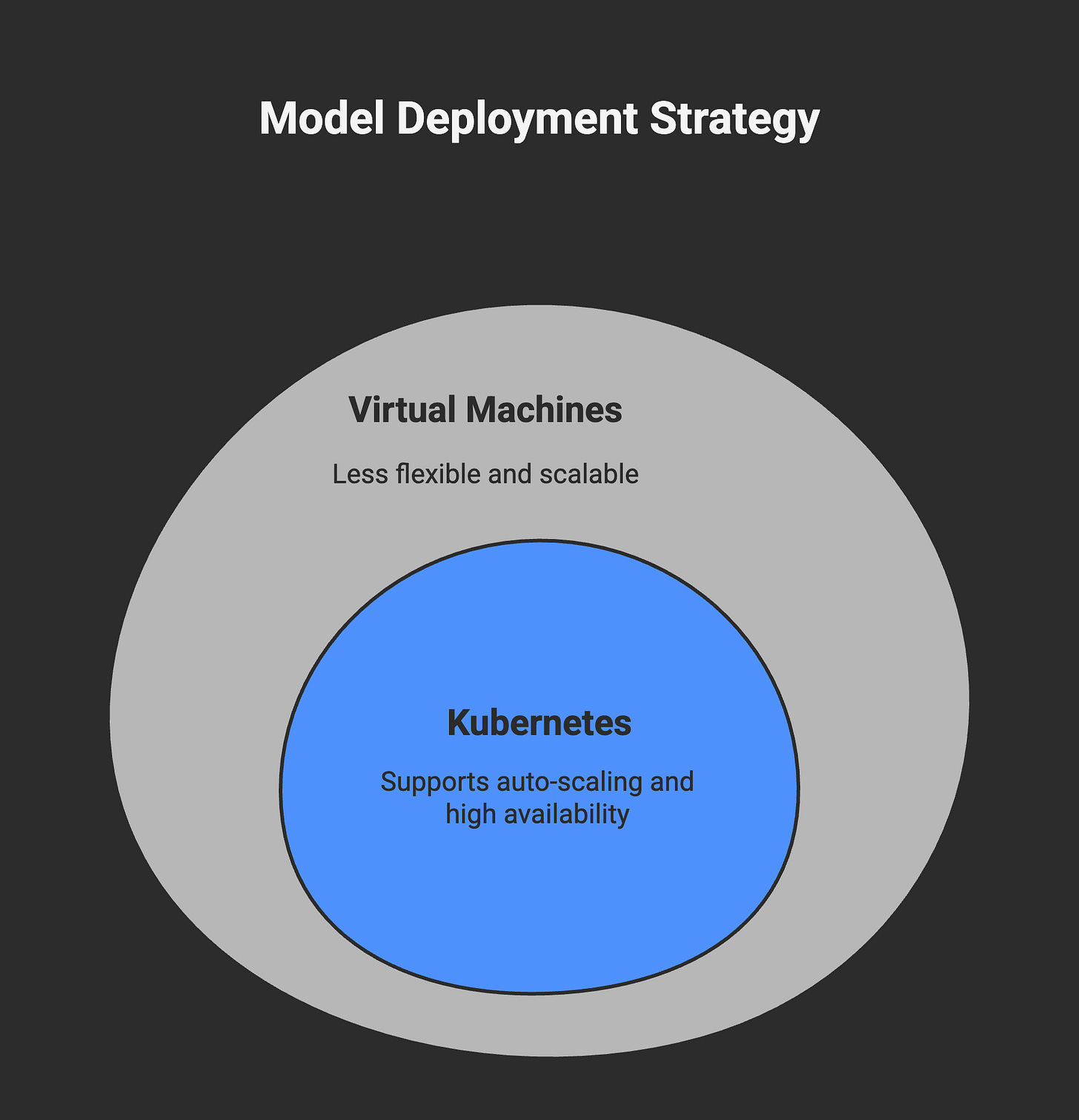

Make Kubernetes your main platform for deploying models because it supports auto-scaling, provides high availability, and makes your deployment portable. If Kubernetes feels too complex to start with, you can begin with traditional Virtual Machines, but keep in mind that they offer less flexibility and scalability than Kubernetes.
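To see how auto-scaling decides on a replica count, here is the core formula that the Kubernetes Horizontal Pod Autoscaler documents: desired replicas = ceil(current replicas × current metric / target metric). The sketch below applies that formula and clamps the result between a minimum and a maximum. The function name and default limits are my own, and the real controller applies extra rules (such as a tolerance band and stabilization windows) that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale replicas in proportion to how far the metric is from its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Never go below the minimum or above the maximum replica count.
    return max(min_replicas, min(max_replicas, raw))
```

For example, with 4 replicas at 90% average CPU and a 60% target, the formula asks for 6 replicas; at 30% CPU it scales down to 2.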

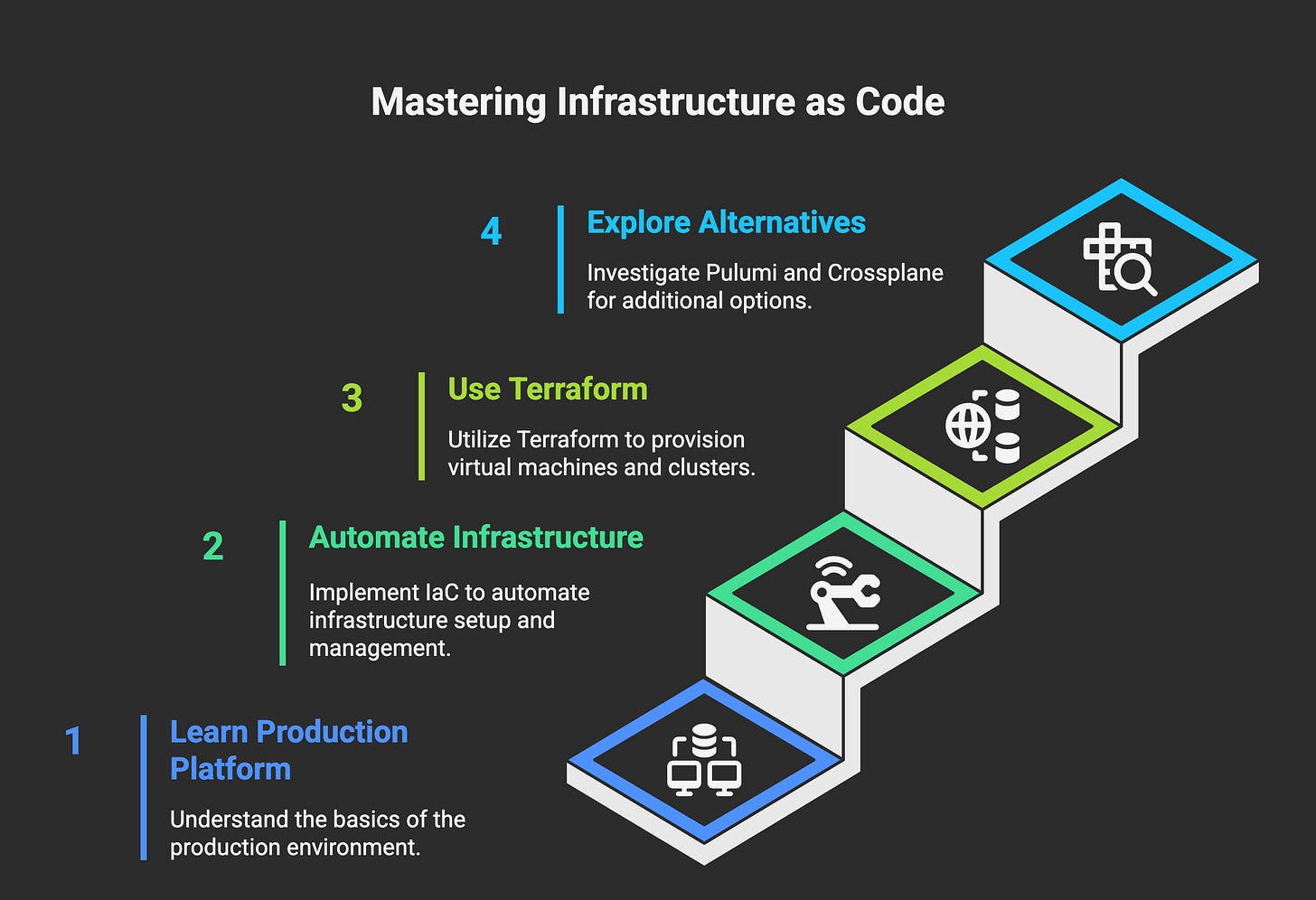

Infrastructure as Code (IaC)

After you get comfortable with the Production Platform, the next step is to learn Infrastructure as Code. This means automating how you set up and manage your infrastructure using code. Start with Terraform to provision virtual machines, Kubernetes clusters, storage, and more. You can also check out Pulumi or Crossplane as alternatives.
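The key idea in IaC tools is declarative: you describe the state you want, and the tool works out which changes get you there. Terraform itself is written in HCL, so the Python below is not Terraform code. It is only a conceptual sketch of the plan step, and the resource names and dictionary format are hypothetical.

```python
def plan(desired, current):
    """Compare desired and current resources; return the actions needed."""
    actions = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif desired[name] != current[name]:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


def apply(desired, current):
    """Pretend to carry out the plan; return the actions and the new state."""
    actions = plan(desired, current)
    return actions, dict(desired)


# Hypothetical infrastructure description for illustration.
desired_state = {
    "vm-train": {"size": "large"},
    "bucket-models": {"versioning": True},
}
current_state = {
    "vm-train": {"size": "small"},
    "vm-old": {"size": "small"},
}
```

Running the plan a second time against the new state returns no actions. That property (applying the same description twice changes nothing) is what makes IaC safe to run from automation.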

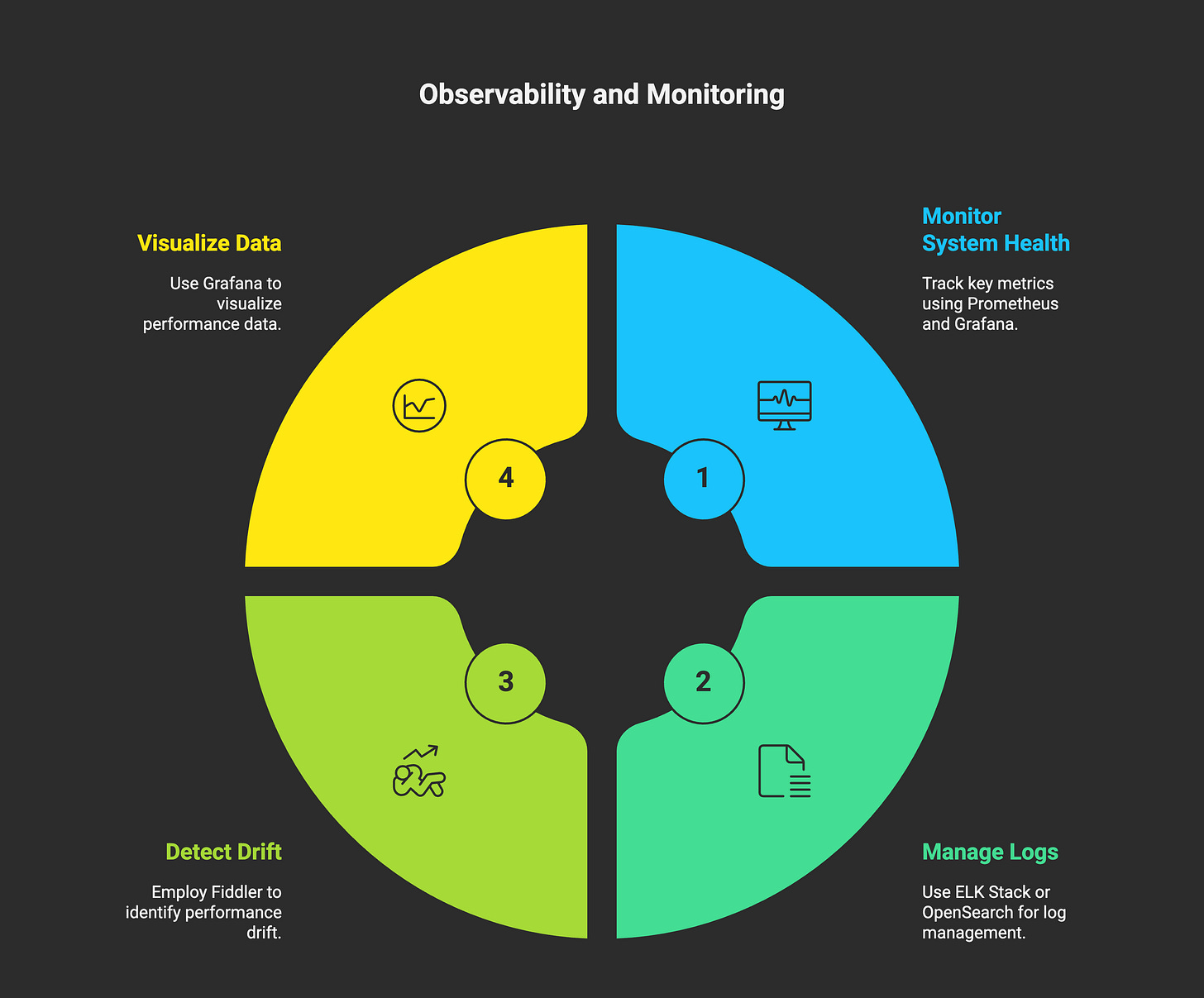

Observability and Monitoring

Once your model is running and performing well, the next important step is monitoring it closely. Keeping a constant eye on your system’s health is crucial. You should learn how to use Prometheus with Grafana to track key metrics and visualize data clearly. It’s also important to get comfortable managing logs with tools like the ELK Stack or OpenSearch. Finally, make sure you know how to monitor your model’s performance over time and detect any drift using tools like Fiddler to keep everything running smoothly.
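To give a feel for what "detecting drift" means in practice, here is a sketch of the Population Stability Index (PSI), a commonly used statistic that compares the distribution of a feature at training time with what the model sees live. This is not how Fiddler or any specific tool is implemented; the function name, the 10 equal-width bins, and the small `eps` floor are assumptions for illustration.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline values must not all be identical")
    width = (hi - lo) / bins

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Live values outside the baseline range fall into the edge bins.
            idx = max(0, min(bins - 1, idx))
            counts[idx] += 1
        # Floor each fraction at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    e = fractions(expected)
    a = fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A PSI of 0 means the two distributions match bin for bin. A commonly quoted rule of thumb treats values above roughly 0.25 as a significant shift worth investigating, but treat that threshold as a starting point and tune it for your own data.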

A Thought to Leave You With

Before you go, remember this: MLOps is a journey, not a sprint. Mastering all these steps takes time and patience. Focus on learning little by little, automating wherever you can, and always keeping an eye on reliability and scalability.

The best MLOps engineers don’t just build pipelines; they build trust in their models and systems. Keep experimenting, keep improving, and you will get there.

Start small, stay curious, and get hands-on.

Until next time, keep building, keep experimenting, and keep exploring your AKVAverse. 💙

Abhishek Veeramalla, aka the AKVAman
